In an unexpected move, OpenAI has shut down its AI text-detection tool due to concerns over its low accuracy rate. This tool, primarily designed to discern between AI-generated and human-written text, struggled with effectively carrying out its function, leading to its discontinuation. OpenAI plans to return with an enhanced version that addresses these shortcomings.


Key Takeaways:

- OpenAI has shut down its AI text-detection tool due to its low accuracy rate.

- The tool struggled to accurately differentiate between AI-generated and human-written text.

- OpenAI plans to develop an improved version of the tool that addresses its limitations.

- The company also commits to extending detection capabilities to audio and visual content.

- The need for reliable AI detection tools is evident given the risk of AI-enabled misinformation campaigns.

The Discontinuation of OpenAI’s AI Text-Detection Tool

OpenAI, a prominent name in artificial intelligence research, introduced an AI text-detection tool in January 2023. This tool was intended to help identify whether a piece of written content was generated by a human or an AI, specifically their AI chatbot, ChatGPT. The company outlined in a paper released the same month in collaboration with Stanford and Georgetown University faculty:

“Generative language models have improved drastically, and can now produce realistic text outputs that are difficult to distinguish from human-written content.”

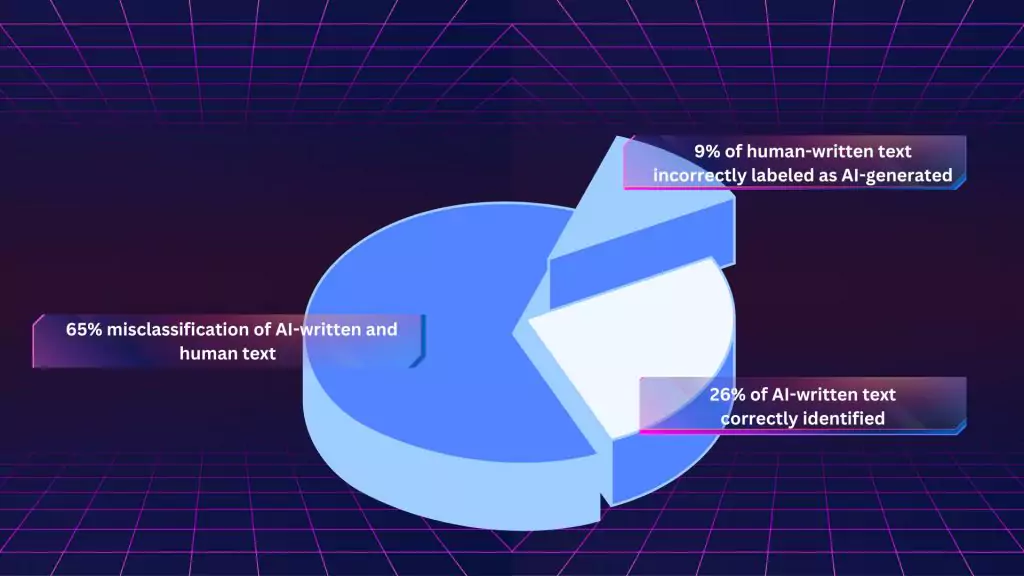

The tool, unfortunately, failed to meet expectations due to its low accuracy. It required users to manually input a text of at least 1,000 characters, a cumbersome process for many potential users. Furthermore, it only successfully identified AI-written text as “likely AI-written” 26% of the time, while falsely labeling human-written text as AI 9% of the time. Such a low rate of accuracy undermined the tool’s purpose and efficacy, hence leading to its discontinuation.
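To make these two rates concrete, here is a minimal sketch of how much a "likely AI-written" flag could be trusted. The 26% and 9% figures come from the reported numbers above; the share of AI-written texts among all submissions (`ai_share`) is not given anywhere in the source, so the values used below are purely illustrative assumptions, and the function name is hypothetical.

```python
def flag_precision(tpr: float, fpr: float, ai_share: float) -> float:
    """Fraction of flagged texts that really are AI-written (Bayes' rule).

    tpr: share of AI-written texts that get flagged.
    fpr: share of human-written texts that get flagged by mistake.
    ai_share: ASSUMED share of AI-written texts among everything checked.
    """
    true_flags = tpr * ai_share            # AI texts correctly flagged
    false_flags = fpr * (1.0 - ai_share)   # human texts wrongly flagged
    return true_flags / (true_flags + false_flags)


TPR = 0.26  # reported: AI text labeled "likely AI-written" 26% of the time
FPR = 0.09  # reported: human text falsely labeled as AI 9% of the time

# Share of AI-written texts the tool fails to flag at all.
MISS_RATE = 1.0 - TPR

# The ai_share values are hypothetical scenarios, not figures from OpenAI.
for ai_share in (0.10, 0.50):
    precision = flag_precision(TPR, FPR, ai_share)
    print(f"AI share {ai_share:.0%}: flag is correct {precision:.1%} of the time, "
          f"missed AI texts {MISS_RATE:.0%}")
```

Under these assumed shares, roughly a quarter of flags would be correct if 10% of checked texts were AI-written, and roughly three quarters if half were. In both scenarios, 74% of AI-written texts would pass unflagged.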

OpenAI acknowledged these limitations and did not recommend the tool as a “primary decision-making tool.” Nonetheless, the company made it public, hoping that feedback and usage would help train and improve the system. The tool was officially disabled on July 20, 2023, with no clear date for when an improved version will be available.

Despite the setback, OpenAI remains committed to developing an improved version of the text-detection tool. The company sees a strong need for such technology given the rise of generative language models and the potential for misuse. It also announced a commitment to extend detection capabilities to audio and visual content, such as images generated with the DALL-E image generator. The enhanced version is expected to address the limitations of the discontinued tool, offering better accuracy and ease of use for detecting AI-generated content.

A*Help asked leading experts in the field of AI detection for their opinions on the current situation and what it means for the future.

Here is a comment from Andrew Rains, Co-Founder at Passed.ai:

“I think Open AI shutting down their text classifier is indicative of just how difficult it is to train and maintain an AI model capable of accurately detecting AI text. At Passed.AI, we acknowledge that AI text detection is currently an imperfect science and, while we provide the most accurate AI detection within our product, we want to give educators as much data about a document as possible so that they don’t have to rely on AI detector alone. Our Flow Score, for example, gives the educator an indication for how naturally a document was written, and we can tell and show how the document’s text evolved over time. This, paired with the AI detection score, gives educators the most complete picture for how a document was created and by whom.”

Garrett from PlagiarismCheck.org, a plagiarism checker service, shares the following:

“OpenAI is one of the pioneering organizations in making AI accessible. It is quite concerning that they decided to close their AI detector due to low accuracy as they have extensive knowledge about AI generation and detection. Maybe they decided to focus on improving the generation capabilities. Besides, they still plan to watermark AI content together with Meta and Alphabet, and this solution can be much more reliable than their AI detector. AI detection is a long run, so our team constantly tests AI-generated texts from various models to tune our TraceGPT and provide the most accurate results. PlagiarismCheck will continue delivering a comprehensive solution, so our clients can be confident in AI or plagiarism presence. Besides, our team works on a solution that will help teachers to see more details about potential cheating in assignments.”

Jon Gillham, Founder at Originality.AI, also weighed in on the subject:

“This industry is challenging and we absolutely empathize with the team at OpenAI. As the creators of ChatGPT, we can imagine the considerable pressures they faced as being considered the source of “truth” within the application. The end result for them was a tool that tried to minimize (but couldn’t eliminate) false positives and as a result, gave up any capability at being useful for detecting AI-generated content. Developing an AI detection tool is not an easy task! It requires working through complex challenges as well as acknowledging that there is never going to be a 100% perfectly accurate solution, no matter what! Balancing the need for accuracy while avoiding the backlash of false positives is a delicate tightrope to walk, even more so for OpenAI than anyone else. We believe that both transparency and accountability are crucial in this industry, and we commend OpenAI for making the tough call to shut it down when its performance fell short of the desired standard.”

Here is the opinion of ZeroGPT, an AI detector, on the situation:

“We believe that OpenAI’s decision might have been influenced by various factors. The development of AI tools for detection and monitoring must strike a balance between user benefits and potential risks. It is possible that OpenAI encountered challenges in ensuring the tool’s accuracy and effectiveness while minimizing false positives and negatives. Additionally, ethical considerations surrounding data privacy and usage might have played a role in their decision-making process. As for ZeroGPT, we are continually striving to improve our product to meet the needs of students and educators. We understand the importance of a reliable and efficient AI-detection tool that can aid academic integrity without unnecessary hindrance to the learning process. To achieve this, we are actively investing in research and development to enhance the accuracy and capabilities of ZeroGPT. User feedback and insights play a significant role in guiding our improvements.”

Understanding the Impact of AI in Detecting False Claims

In an era when misinformation campaigns are a pressing concern, AI detection tools like OpenAI’s can play a crucial role. Automated systems powered by advanced language models have the potential to propagate misleading content at an unprecedented scale. OpenAI warns:

“For malicious actors, these language models bring the promise of automating the creation of convincing and misleading text.”

Examples of misuse could range from students using AI tools to cheat on assignments to far more serious instances, such as political interference via mass dissemination of AI-generated disinformation. Given the high stakes, the development of reliable AI detection tools is paramount. They not only help in identifying and debunking false claims but also contribute to the broader discourse on the responsible use of AI technologies.

The Verdict

OpenAI’s decision to discontinue its AI text-detection tool highlights the challenges in developing systems that can accurately differentiate between AI and human-generated content. While the tool had its limitations, it was a stepping stone towards a much-needed solution to the growing problem of automated misinformation. OpenAI’s commitment to refining and extending this technology signals a promising future in the fight against AI-enabled false claims.
