The academic community is disturbed by the proliferation of AI-generated essays. However, developers and plagiarism checkers keep their fingers on the pulse. They are already testing ways to watermark such writings. Several countries have even passed legislation to address the issue.

The subject of plagiarism in student essays has been a bone of contention between students and faculty for decades. Despite both parties acknowledging the importance of the problem, they approach the solution differently: students come up with ways to make life easier for themselves, while teachers explore and utilize plagiarism checkers.

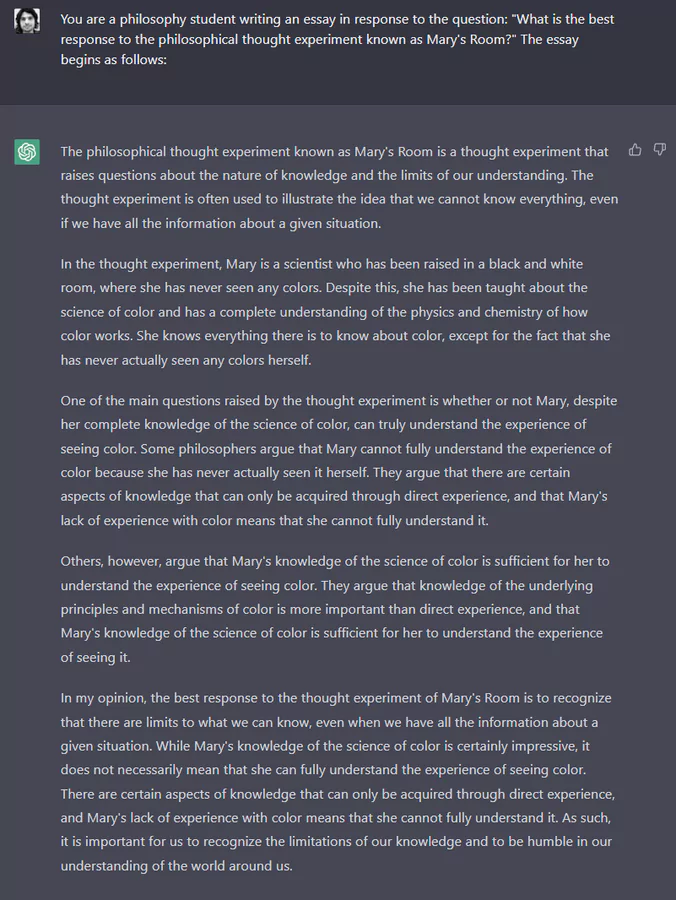

Things started to get out of hand in November 2022, when OpenAI released ChatGPT, a large language model chatbot built on GPT-3.5. This release promises to push the war between good and evil in the academic world onto an entirely new and unforeseen battlefield.

How good is ChatGPT?

Surprisingly good! Modern AI can deliver poetry, write essays and research papers, produce metalcore music in tabs, and even draft scripts for movies so ingeniously that it’s often hard to distinguish between a human’s creativity and a machine’s pragmatic craft. Rumors about the unheard-of features of the product spread like wildfire among students. Not surprisingly, many saw the opportunity to sweeten their essays with the help of an artificial brain.

An adventurous student at a university in Pittsburgh, who preferred to remain anonymous, says he’s excited to embrace this new opportunity. He found out about ChatGPT on Twitter soon after it was released and, unlike his peers, instantly foresaw the future of the product and its potential benefits.

“While my fellow students tested the chatbot for jokes, I was instantly intrigued with the idea of using it to write a paper.”

That is how the student, referred to here as Alex, describes it. And he did just that. Having tested the bot on a few of his essay prompts, he concluded that the result would hardly earn him an “A” for the course. The writing was flawed. The machine shuffled phrases and tossed in inaccurate quotes, making the essay look like something a human mind couldn’t have produced. However, that didn’t deter our hero from experimenting further with what was clearly raw and awkward material. Alex polished the text by hand, making adjustments where needed and improvising with the prompts he typed into the chatbot. Eventually, the result of this bizarre human-robot collaboration exceeded all expectations. The essay stood firm against plagiarism checkers.

Flushed with success, Alex also pinpointed a financial opportunity. Before ChatGPT went viral, Alex had used it to generate a few papers for commercial purposes. He sold them to other students for what he estimates was “a few hundred bucks,” making an hourly rate that F. Scott Fitzgerald couldn’t dream about.

Rising controversy

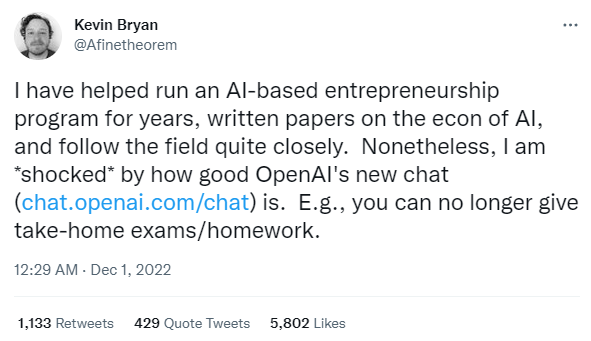

The student mentioned above is one of many trying to exploit this new technology. ChatGPT is quickly becoming a household name among students and educators as more AI-generated essays emerge. So, are we ready to announce that AI will transform academia? Some experts are quick to predict that ChatGPT has already signed the death warrant for the traditional education system. Indeed, the undergraduate essay has been an accepted way to assess students’ academic performance for at least a century. The way we have taught children to research, think, and analyze for generations is on the verge of being disrupted by a soulless algorithm. Kevin Bryan, a professor at the University of Toronto, is stunned by the potential of the prototype. Bryan tweeted:

“I am shocked by how good OpenAI’s new chat is. You can no longer give take-home exams/homework.”

This phenomenon doesn’t threaten only those who teach English or literature. The chatbot is also capable of composing music and solving some mathematical problems. And even though the bot’s algorithm is still imperfect and human involvement is required to correct its mistakes, it won’t be ages before AI like ChatGPT advances so much that it becomes pointless to put the adjective “artificial” before the noun “intelligence.”

Although artificial intelligence poses an obvious threat to the education system, not everyone agrees with Professor Bryan’s attempts to herald a revolution in traditional teaching methods. Many professors are really concerned about the possible spread of plagiarism in student papers, questioning whether anti-plagiarism software can track machine-generated papers.

Can we track it down?

The experts at Turnitin, one of the most reputable plagiarism checkers, aren’t on pins and needles. At least not yet. Eric Wang, the company’s vice president of AI, notes that the current generation of AI writing systems relies on a “fill-in-the-next-word” process, which the company’s engineers can already detect. In a recent interview with Greg Toppo, Wang discusses how generative AI like ChatGPT will likely transform education and how it remains detectable anyway.

Wang said:

“AI applications tend to use more high-probability words in expected places and ‘favor those more probable words,’ so we can detect it.”

In addition, Wang warned students that even if their artificially generated essays cannot be traced back to a source, they still remain detectable as machine-written.
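To make Wang’s point a little more concrete, here is a minimal sketch of the idea of counting “high-probability words in expected places.” It is not Turnitin’s method, which the interview does not describe in detail. The tiny bigram model, the function names `train_bigram` and `top_choice_rate`, and the sample sentences are all assumptions made purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str):
    """Count, for every word in a reference corpus, which words follow it."""
    words = corpus.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def top_choice_rate(text: str, follows) -> float:
    """Share of words that are the single most likely successor of the
    previous word, according to the reference counts.

    Word pairs whose first word never appears with a successor in the
    reference corpus are skipped, because the toy model has no prediction.
    """
    words = text.lower().split()
    hits = 0
    total = 0
    for prev, nxt in zip(words, words[1:]):
        if prev not in follows:
            continue
        total += 1
        best_word, _count = follows[prev].most_common(1)[0]
        if nxt == best_word:
            hits += 1
    return hits / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical reference corpus standing in for a real language model.
    model = train_bigram("the cat sat on the mat and the cat sat on the rug")
    print(top_choice_rate("the cat sat on the cat", model))   # very predictable
    print(top_choice_rate("the rug sat on the mat", model))   # less predictable
```

A text that almost always picks the most expected next word scores close to 1 in this toy measure, while less predictable writing scores lower. A real detector would presumably rely on a full language model and far more evidence than a single ratio.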

If Wang doesn’t sound convincing enough, OpenAI itself has no intention of shirking responsibility for its product. Scott Aaronson, a researcher at OpenAI, is working on a tool for watermarking the outputs produced by models like ChatGPT. He says the company is developing ways to make it harder to take a GPT output and present it as a human’s work. Hendrik Kirchner, an OpenAI engineer, already has a functioning prototype of the watermarking scheme. Whenever an AI product generates text, Kirchner’s algorithm embeds an “unnoticeable secret signal” that points to the origin of the text. Aaronson explains that while people may be puzzled about “who,” or rather “what,” created a piece of writing, OpenAI will be able to uncover the watermark because it holds the cryptographic key.
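How can a secret key hide a signal in ordinary-looking text? The toy sketch below shows one way the general idea could work. It is not OpenAI’s or Kirchner’s actual scheme, whose details are not given here. It assumes a generator that, at each step, may choose among several equally acceptable words and uses a keyed hash (HMAC) to break the tie. The names `keyed_score`, `generate`, and `detect`, the `"<s>"` start marker, and the made-up vocabulary are hypothetical.

```python
import hashlib
import hmac


def keyed_score(key: bytes, prev: str, word: str) -> float:
    """Map (previous word, candidate word) to a number in [0, 1) that can
    only be reproduced by someone who holds the secret key."""
    digest = hmac.new(key, f"{prev}|{word}".encode(), hashlib.sha256).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def generate(key: bytes, slots, start: str = "<s>"):
    """Toy generator: each slot is a list of equally acceptable words, and
    the candidate with the highest keyed score is chosen."""
    words = []
    prev = start
    for candidates in slots:
        choice = max(candidates, key=lambda w: keyed_score(key, prev, w))
        words.append(choice)
        prev = choice
    return words


def detect(key: bytes, words, start: str = "<s>") -> float:
    """Average keyed score of a text. Watermarked text tends to score well
    above 0.5; unrelated text is expected to hover around 0.5."""
    if not words:
        return 0.0
    total = 0.0
    prev = start
    for word in words:
        total += keyed_score(key, prev, word)
        prev = word
    return total / len(words)


if __name__ == "__main__":
    secret = b"demo-secret-key"  # assumption: stands in for the provider's key
    # Made-up vocabulary: 30 positions with 4 interchangeable words each.
    slots = [[f"w{i}_{j}" for j in range(4)] for i in range(30)]
    text = generate(secret, slots)
    print("score with the right key:", detect(secret, text))
    print("score with a wrong key:  ", detect(b"someone-else", text))
```

In this sketch the signal is invisible to a reader because every chosen word was acceptable anyway, and only the key holder can recompute the scores and notice that they are suspiciously high. That is also why the question of who gets access to the key matters so much.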

Human creators strike back?

As it stands, only OpenAI would have access to the cryptographic keys, leaving everyone else in the dark as to the origin of a text. However, the general public also needs a way to check these watermarks in order to know what kind of author they are dealing with. Given how sensitive the issue is in the academic environment, such a key would give teachers and professors priceless evidence about who wrote the essays they’re reading.

Other risks are evident as well. AI companies may be walking on thin ice in terms of legal regulations. In light of ChatGPT’s tremendous expansion, OpenAI and other AI companies should be cautious about the legal side of such enterprises, not just about access matters. That is especially true now that some companies and even state entities have taken action in response to AI’s growing popularity. Stack Overflow, a popular resource for programmers, issued a statement in early December addressing AI-generated answers. “Because the average rate of getting correct answers from ChatGPT is too low, the posting of answers created by ChatGPT is substantially harmful to the site and to users who are asking or looking for correct answers,” the statement explains.

The war against machines is also unfolding on the photography front. Following the explosion of artificially generated images, some stock photography, footage, and music websites banned material produced by AI services such as Midjourney and DALL·E. These platforms choose to remain human-focused, striving to protect and promote human art.

Some countries, such as China, have declared their willingness to stand against AI. The upcoming rules, taking effect on January 10, 2023, are the country’s response to the AI-generation trend hypnotizing the tech world. The new regulation, issued by the Cyberspace Administration of China, prohibits the creation of AI-generated media without clear labels, such as watermarks.

These are just a few examples of how humanity will resist AI-generated content that is more a curse than a blessing, any way you slice it.

Final thoughts

AI tools are a double-edged sword. They could enable people to create things some of us wouldn’t be able to produce otherwise, like illustrations for comics, lyrics to songs, or descriptive writing. At the same time, ensuring that the material the AI was trained on was legally (and ethically) sourced is a big issue. The bottom line is that watermarking is only one of the options OpenAI is exploring. All we can do now is wait for a solution that settles the debate. In the meantime, some services are undeniably plagiarism-free and have maintained a stellar reputation over the years.

published December 29, 2022 - updated July 20, 2023
