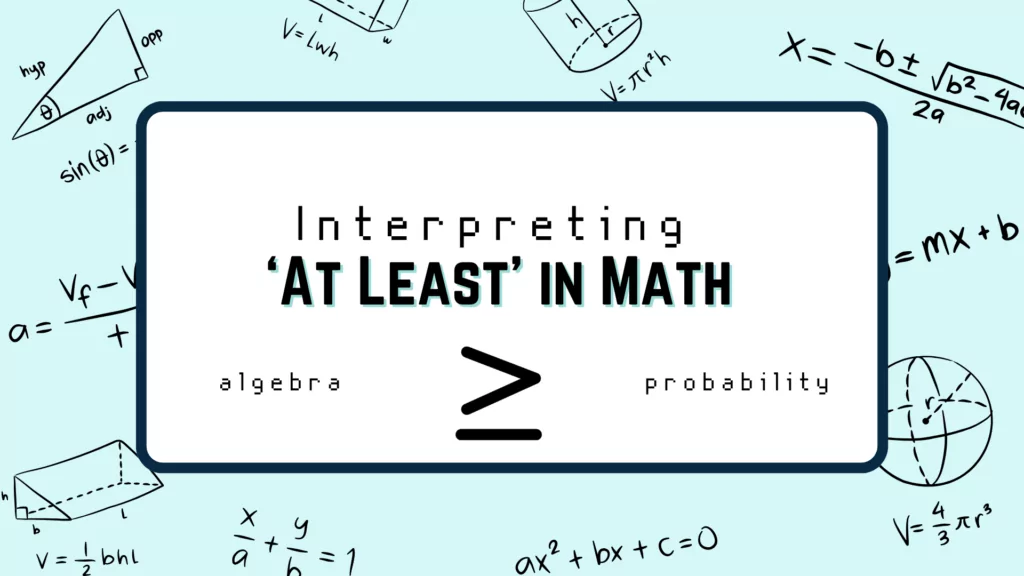

In mathematics, precise language and clear definitions are paramount to ensure accurate communication and problem-solving. Among the various phrases used, “at least” holds a significant place, often encountered in algebra, probability, and other mathematical contexts. This guide delves into the meaning of “at least” in math, its practical applications, and its relevance in problem-solving.

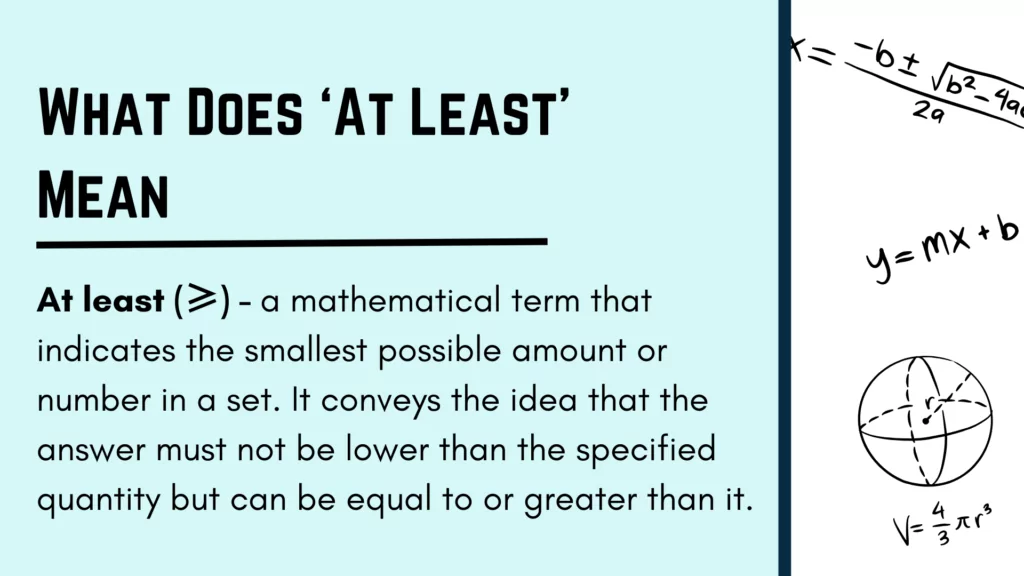

Understanding the Concept of “At Least”

“At least” sets a lower bound: it indicates the smallest value a quantity is allowed to take. The quantity must not be lower than the specified value, but it can be equal to or greater than it. In mathematical notation, “at least” is represented by the symbol ≥ (“greater than or equal to”). Even people who rely heavily on a math equation solver have almost certainly used this symbol at least once.

Consider the inequality X ≥ 7. It means that the value of X must be at least 7. In other words, X can be any number greater than or equal to 7, including 7 itself. If X is restricted to whole numbers, it can take the values 7, 8, 9, and so on; if X can be any real number, values such as 7.5 also qualify.
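As a quick illustration, the inequality can be checked mechanically. This minimal Python sketch uses a hypothetical helper, `is_at_least`, and, because the solution set is infinite, only lists the whole numbers from 0 to 12 that satisfy X ≥ 7; the upper limit of 12 is an arbitrary choice for display.

```python
def is_at_least(x, bound):
    """Return True when x >= bound, i.e. when x is 'at least' bound."""
    return x >= bound

# Whole numbers from 0 to 12 (an arbitrary display window) that satisfy X >= 7.
# Note that 7 itself is included.
solutions = [x for x in range(13) if is_at_least(x, 7)]
print(solutions)  # [7, 8, 9, 10, 11, 12]

# When X may be any real number, non-integers can satisfy the inequality too.
print(is_at_least(7.5, 7))   # True
print(is_at_least(6.99, 7))  # False
```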

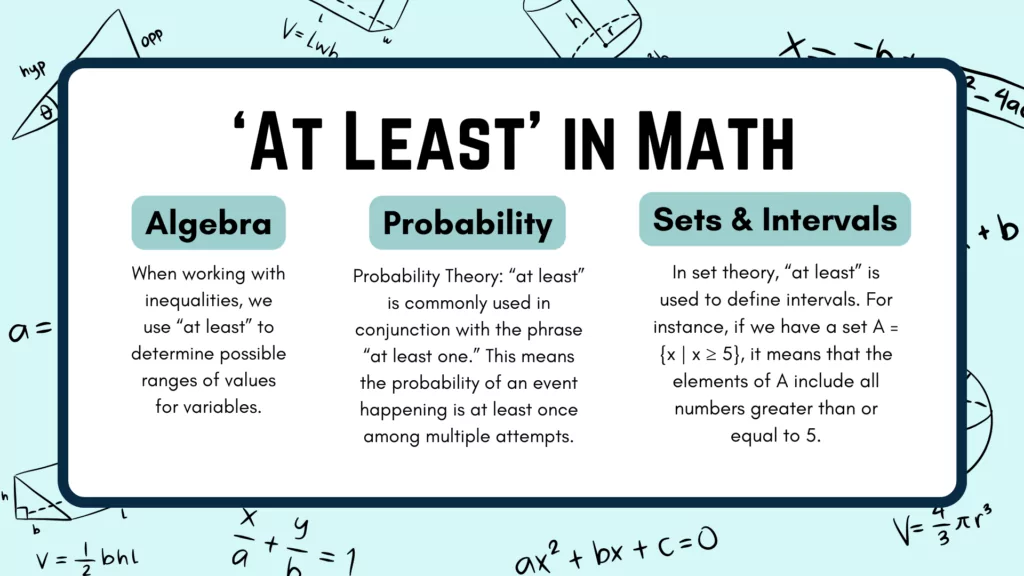

Probability is the branch of mathematics that deals with determining the likelihood of an event occurring. In probability theory, “at least” most often appears in the phrase “at least one,” as in “the probability of rolling at least one six.”

When we talk about “at least one” in probability, we are referring to the probability of an event happening one or more times across multiple attempts. Counting every way that can happen is often tedious, so the usual approach is the complement rule, which only requires the probability that the event never happens: P(at least one) = 1 − P(none).
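A minimal sketch of the complement rule in Python, assuming the attempts are independent and each succeeds with the same probability `p` (the assumption that lets P(none) be written as (1 − p)ⁿ). The function name `p_at_least_one` is hypothetical, and `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction

def p_at_least_one(p, n):
    """Probability of at least one success in n independent trials,
    each succeeding with probability p, via P(at least one) = 1 - P(none)."""
    p_none = (1 - p) ** n  # every one of the n trials fails
    return 1 - p_none

# Flipping a fair coin 3 times: P(at least one head) = 1 - (1/2)**3
print(p_at_least_one(Fraction(1, 2), 3))  # 7/8
```

With a fair coin flipped three times, the only way to get no heads is TTT, so P(none) = 1/8 and P(at least one head) = 7/8.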

“At Least” in Practical Problem-Solving

- Real-Life Applications. The concept of “at least” is prevalent in many real-life scenarios. For example, a manufacturing quality-control standard might require that at least a certain percentage of products meet a minimum specification. In exams, students need to score at least a certain number of marks to pass.

- Algebraic Manipulation. Algebra often involves solving equations and inequalities, and “at least” statements translate directly into inequalities that use ≥. Solving such an inequality determines the range of values a variable can take. For example, if x + 10 must be at least 60, then x + 10 ≥ 60, so x ≥ 50.
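The algebraic step of turning an “at least” condition into a range of values can be sketched as a small solver for linear inequalities of the form a·x + b ≥ c. The function `solve_at_least` and the exam weighting in the usage lines are hypothetical; the key detail is that dividing by a negative coefficient reverses the direction of the inequality.

```python
from fractions import Fraction

def solve_at_least(a, b, c):
    """Solve the linear inequality a*x + b >= c for x (a must be nonzero).

    Returns a pair (relation, bound): (">=", k) means x >= k and
    ("<=", k) means x <= k.
    """
    if a == 0:
        raise ValueError("a must be nonzero for a linear inequality in x")
    # Subtract b from both sides, then divide by a.
    bound = Fraction(c - b) / Fraction(a)
    # Dividing by a negative number reverses the direction of the inequality.
    relation = ">=" if a > 0 else "<="
    return relation, bound

# Hypothetical grading rule: final mark = (3/5) * exam + 30, which must be at least 60.
relation, bound = solve_at_least(Fraction(3, 5), 30, 60)
print(f"exam {relation} {bound}")  # exam >= 50

# A negative coefficient: -2x + 4 >= 10 simplifies to x <= -3.
relation, bound = solve_at_least(-2, 4, 10)
print(f"x {relation} {bound}")  # x <= -3
```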

Utilizing “At Least” in Sets and Intervals

Sets and Inequalities. In set theory, “at least” is used to define sets and intervals. For instance, the set A = {x | x ≥ 5} consists of exactly those numbers x that are at least 5, that is, all numbers greater than or equal to 5.

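Interval notation provides a concise way to represent such sets of numbers. For example, the interval [3, ∞) represents all numbers greater than or equal to 3. The square bracket indicates that the endpoint 3 is included, while a parenthesis is always used next to ∞ because infinity is not a number and cannot belong to the set.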
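To make the set-builder and interval notation concrete, here is a minimal Python sketch of a set of the form [a, ∞). The class name `AtLeast` is hypothetical; membership tests x ≥ a, and infinity itself is rejected to mirror the parenthesis next to ∞.

```python
import math

class AtLeast:
    """The set {x | x >= lower}, written [lower, ∞) in interval notation."""

    def __init__(self, lower):
        self.lower = lower

    def __contains__(self, x):
        # The square bracket includes the endpoint itself (x == lower passes);
        # infinity is not a real number, so it is never a member.
        return math.isfinite(x) and x >= self.lower

    def __str__(self):
        return f"[{self.lower}, ∞)"

A = AtLeast(5)  # A = {x | x >= 5}
print(A)                                # [5, ∞)
print(5 in A, 4.9 in A, math.inf in A)  # True False False
```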

Probability of Complementary Events

In probability, two events are complementary when they are mutually exclusive and together cover all possible outcomes. When dealing with “at least one” probabilities, the complementary event is “none,” or zero occurrences. Using the complement rule, we find the probability of an event occurring at least once by subtracting the probability of no occurrences from 1.
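The complement rule can be checked by brute force on a small example. This Python sketch assumes a fair six-sided die rolled four times (an illustrative choice, not taken from the text above), counts every sequence containing at least one six, and compares the result with 1 − P(no sixes).

```python
from fractions import Fraction
from itertools import product

ROLLS = 4  # number of times a fair six-sided die is rolled

# All 6**4 = 1296 equally likely sequences of rolls.
outcomes = list(product(range(1, 7), repeat=ROLLS))

# Direct count: sequences that contain at least one six.
at_least_one = sum(1 for seq in outcomes if 6 in seq)
p_direct = Fraction(at_least_one, len(outcomes))

# Complement rule: P(at least one six) = 1 - P(no sixes) = 1 - (5/6)**ROLLS.
p_complement = 1 - Fraction(5, 6) ** ROLLS

print(p_direct, p_complement, p_direct == p_complement)  # 671/1296 671/1296 True
```

Both approaches give 671/1296, roughly 0.518, but the complement rule needs a single power and subtraction, whereas the direct count has to inspect all 1,296 outcomes.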

Conclusion

Understanding the concept of “at least” in mathematics is crucial for precise communication and problem-solving, from routine exercises to the hardest problems in a given field. Its applications extend beyond theory to probability, algebra, and real-life scenarios. By grasping what “at least” means and expressing it correctly with ≥, set-builder notation, or interval notation, problem solvers can sharpen their analytical skills and draw accurate conclusions in a wide range of mathematical contexts.
