At a time when AI writing tools are booming, more and more students turn to them when faced with an overwhelming number of tasks. Artificial intelligence developers, in turn, are trying to meet the growing demand and improve their tools as much as they can. However, when developers rush to keep up with that demand, the quality of AI essay writers sometimes plummets.


Two of the most popular AI writing tools are ChatGPT and Bard. Some users see little difference between them, since both can address most student questions. Still, we decided to put them to the test with a specific task and see whether there is a definitive answer to the question of which is better: ChatGPT or Bard.

ChatGPT vs Bard: What’s the Key Difference?

To the naked eye, ChatGPT and Bard serve the same purpose. Both services offer an AI chat where you can paste your questions or tasks, ranging from ‘what are the top 5 easy cocktail recipes’ to ‘write me a structure for an article about ChatGPT vs Bard’. The key difference is that ChatGPT is more oriented toward writing assignments, whereas Bard is a better pick for those who need proper academic research.

A little spoiler: both platforms are fairly evenly matched in terms of writing. However, as with any AI assistant, the output requires additional proofreading and tailoring to a specific assignment’s style and requirements.

| | ChatGPT | Bard |
|---|---|---|
| 💼 Company | OpenAI | Google |
| 🤖 Model | GPT-3.5 | LaMDA + Gemini |
| 📧 Sign-in | Required (email) | Required (Google account) |
| 💰 Free/Paid Use | Free use + Premium plan available | Free use only; Premium plan expected in the future |
| ✍️ Writing Competence | Medium-quality academic essay with evident AI influence; requires additional formatting | Well-structured, medium-quality academic essay with a variety of citations; needs a more personal touch |
| 🎯 Best fit for | Swift text creation with minimal input | Text creation as well as more reliable research |
| 🌟 A*Help Score | 62/100 | 67.5/100 |

Bard vs ChatGPT: First View

ChatGPT and Bard, developed by OpenAI and Google, respectively, have garnered significant attention for their ability to generate human-like text across various domains (education, in particular).

ChatGPT’s interface is user-friendly and accessible through a web browser. When you start using the platform, you are greeted with a simple, clean text box where you can type your questions or prompts. The conversation follows a chat-like format in which the AI ‘responds’ to each message.

With Bard, it is pretty much the same: a text input area where users can start composing their content. The only major difference lies in the color palette. Both tools are sleek, intuitive, and straight to the point.

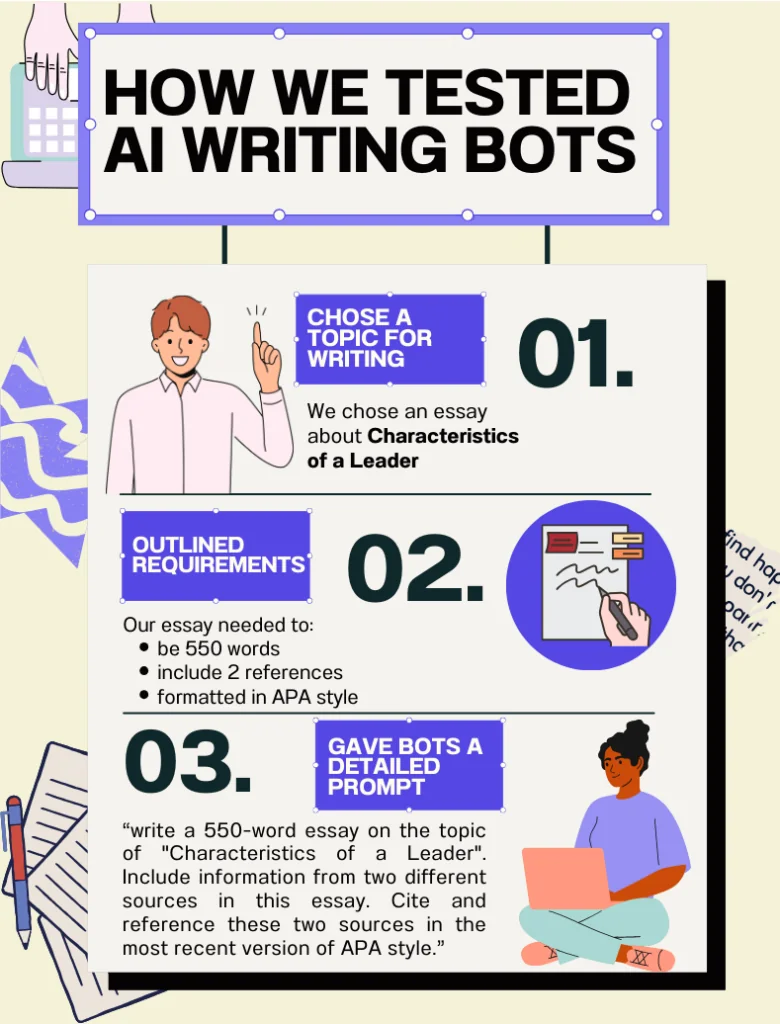

How We Did the Testing

For each service we review, we create a specific testing scenario. Our team makes sure not only to assess the quality of the help itself but also to replicate a typical student journey and evaluate the platform based on it. So, we pay attention to the service’s value for money, as well as the user experience on the platform, since both can significantly influence the overall impression.

The decisive part of our evaluation is reviewing the actual quality of help. Each type of service requires its own set of rules so that the marks genuinely reflect how well the platform performs. We understand that some people rely on the “out of sight, out of mind” tactic when dealing with a ton of unfinished assignments, but you don’t have to compromise on quality when doing so.

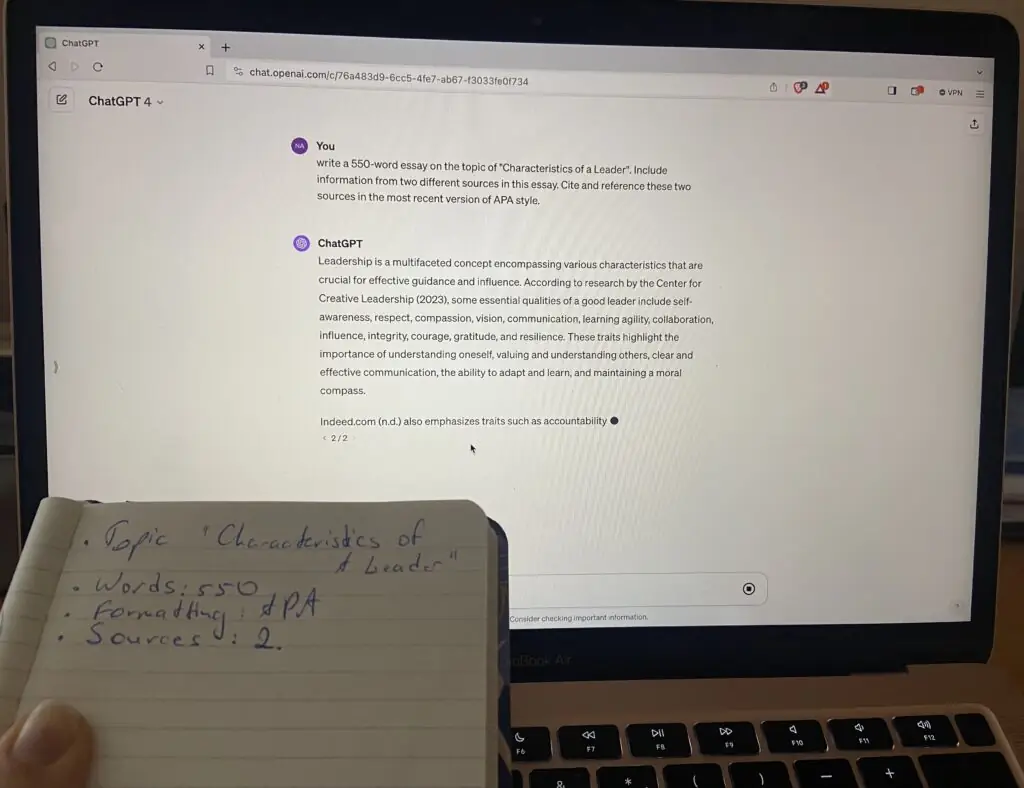

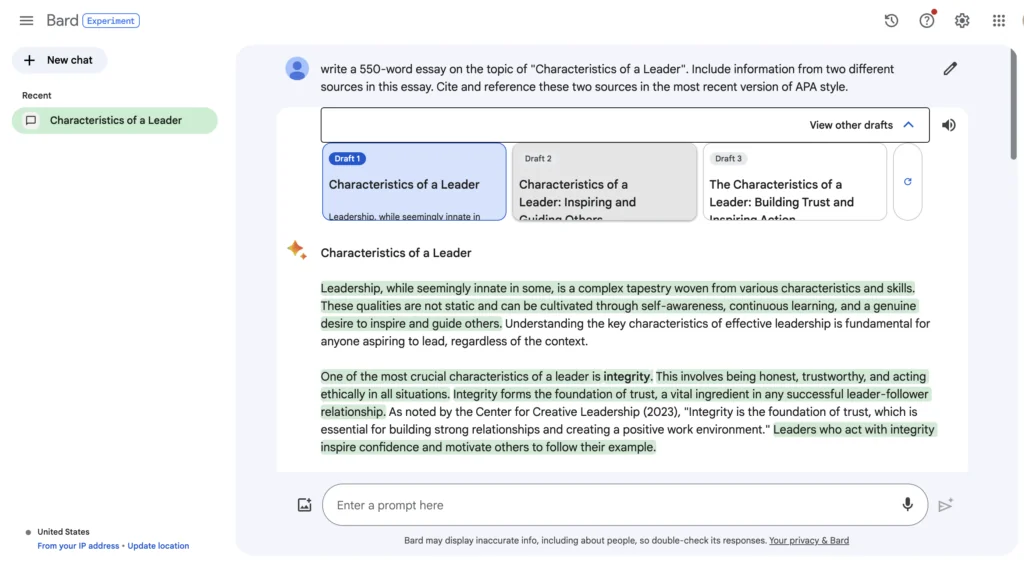

To compare ChatGPT and Bard, we asked both tools to write a simple academic essay with specific requirements. You can see the detailed task in the picture below.

ChatGPT vs Bard: Results

Even if a tool is lacking in certain departments like web design and customer support, the quality of help is what matters in the end. Most students in need of a quick and easy solution to their problems are much more focused on the final outcome.

Generation flow: ChatGPT offers a smoother work process

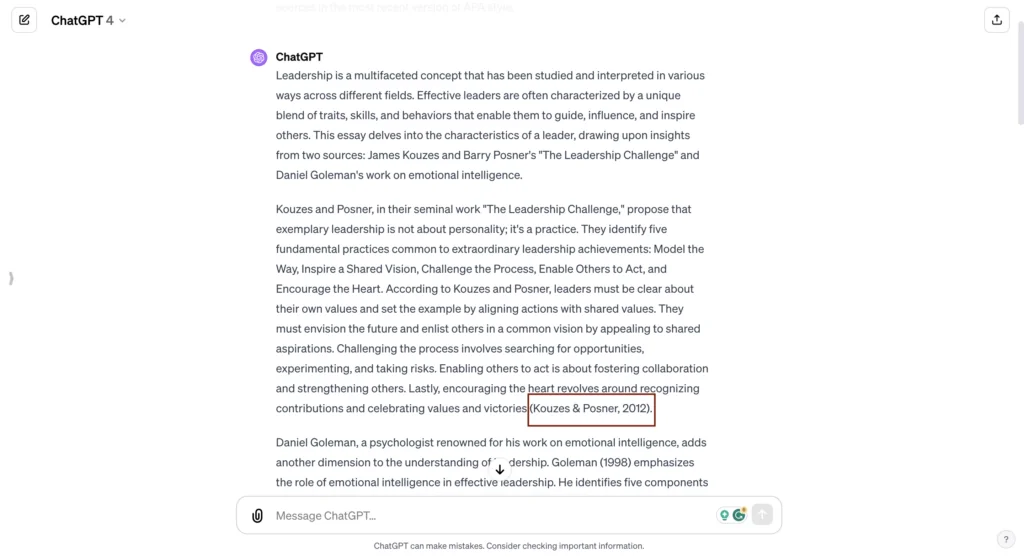

So, no more teasing: let’s get our hands on the results. Once again, we tasked ChatGPT and Bard with writing an essay. At first glance, neither essay had anything special about it, but we were here to dig deep.

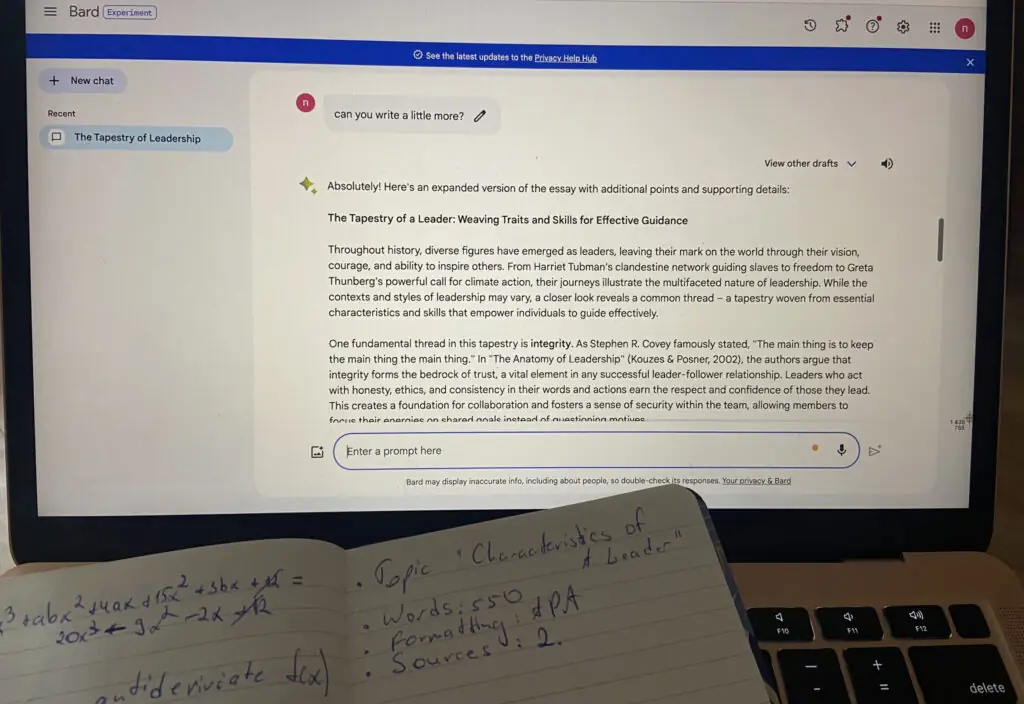

In terms of the overall generation flow, ChatGPT impressed us a bit more: the whole process was swifter and didn’t require any additional actions. Bard, in turn, was… pretty much the same. The only difference we noticed was that ChatGPT can produce a complete essay in one or two steps, whereas with Bard we had to ask it to expand the text a few times to reach the word limit. It isn’t a deal breaker, but it is a bit annoying when the paper’s deadline is pressing.

Referencing: Bard provides higher-quality references with better formatting

| | ChatGPT | Bard |
|---|---|---|
| Number of references included | 2 sources | 3 sources |
| Compliance with Citation Style | Decent, but not properly following APA 7th edition | Decent, but not properly following APA 7th edition |

Another major factor is referencing. We specifically asked both chatbots to include relevant sources in the text, formatted in the most recent edition of APA style. ChatGPT got an underwhelming score here, although it cited two sources (a textbook and a common academic article), just as we asked. One of the most prominent features of APA 7th edition is the italicization of specific elements, such as the title of the source. ChatGPT didn’t handle this well and italicized the whole citation instead.

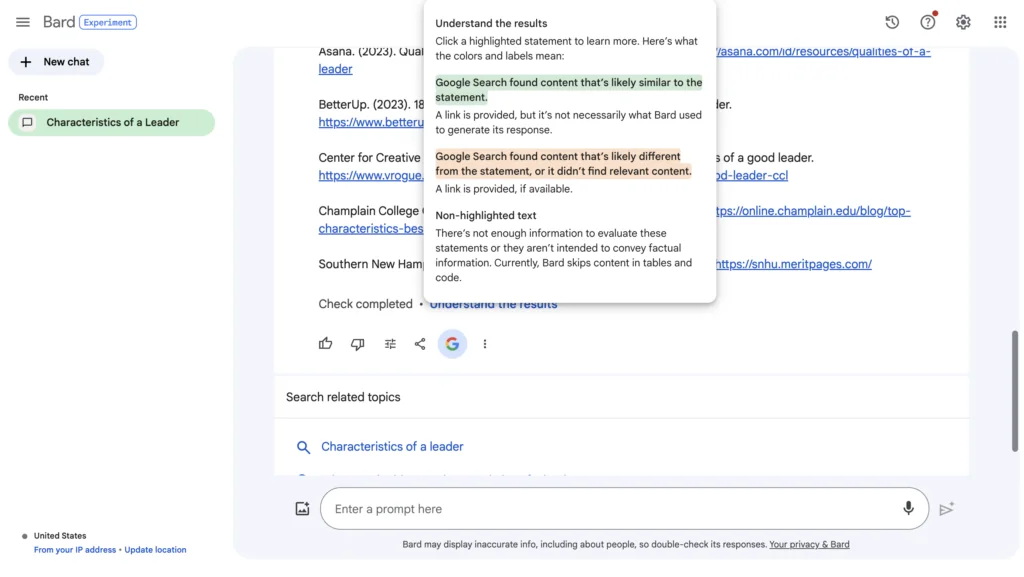

Bard, on the other hand, provided three sources (also with incorrectly italicized words). Since Bard has proven better suited to academic research, we were a bit startled to see three surface-level articles in the reference list, even though far more ‘serious’ materials are available.

Generally, ChatGPT handles longer tasks more easily

| | ChatGPT | Bard |
|---|---|---|
| Word count | 568 words | 544 words |

When it comes to writing essays, word count is one of the things tagged ‘extremely relevant’. If you are unfortunate enough to have a highly demanding educator and you don’t reach the word count, it can severely affect your course mark.

Between our two contestants, ChatGPT takes the prize for reaching the required word limit, even exceeding it by almost twenty words. With Bard, it was more complicated: as we’ve already mentioned, it needed several reassuring ‘continue writing’ messages, and even then it fell six words short.

Professor’s Evaluations

| | ChatGPT | Bard |
|---|---|---|
| Professor’s score | 30.9/100 | 37.4/100 |

As much as we want our own review to be as precise and objective as possible, it is always valuable to get an external opinion. This is especially true when that opinion comes from a qualified professional, such as an American professor with a Ph.D., who can evaluate every aspect of the generated paper through an expert lens. Based on our professor’s review, the results are extremely underwhelming: neither service reached even 40 points out of 100.

What exactly triggered such low scores? Let’s look at both essays separately.

For ChatGPT, the professor noted that there were several formatting issues, including problems with vertical and horizontal spacing, line spacing, reference formatting, and page breaks. The essay was also criticized for the excessive use of italics in references and highly redundant writing. While the document maintained integrity and met length requirements, mechanics such as spelling, grammar, punctuation, and word choice needed improvement. Citation formatting and reasoning/logic also received low scores, with issues related to in-text citations and the quality of scholarly references.

We’ve also tested the GPT-3.5 version to see whether you can really create good essays for free. You can check out our findings here.

On the other hand, Bard’s essay demonstrated slightly better document formatting but still needed improvement in areas like vertical and horizontal spacing, line spacing, reference indentation, and page headers. As with ChatGPT, Bard’s essay was criticized for excessive italics in references and redundant writing. The document maintained integrity but fell slightly short in length. Mechanics, including spelling, grammar, punctuation, and word choice, generally received higher scores than ChatGPT’s. However, citation formatting was severely lacking, with issues related to authors and in-text citations. The reasoning and logic aspect showed improvements in acuity, clarity, and objectivity but still had room for improvement in efficiency.

| Criterion | ChatGPT’s Essay | Bard’s Essay |
|---|---|---|
| Integrity | 100% | 100% |
| Length | 100% | 96% |
| Spelling | 90% | 89% |
| Grammar | 66% | 100% |
| Punctuation | 93% | 97% |
| Word Choice | 74% | 92% |
| Citation formatting | 30% | 0% |
| Efficiency | 6% | 6% |
| Acuity | 6% | 53% |
| Clarity | 31% | 40% |
| Objectivity | 33% | 43% |

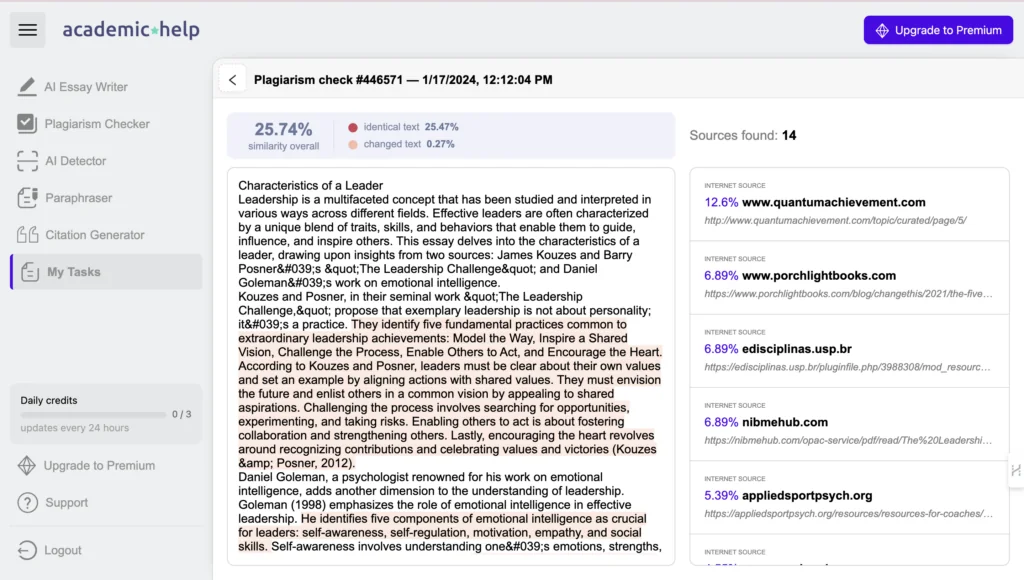

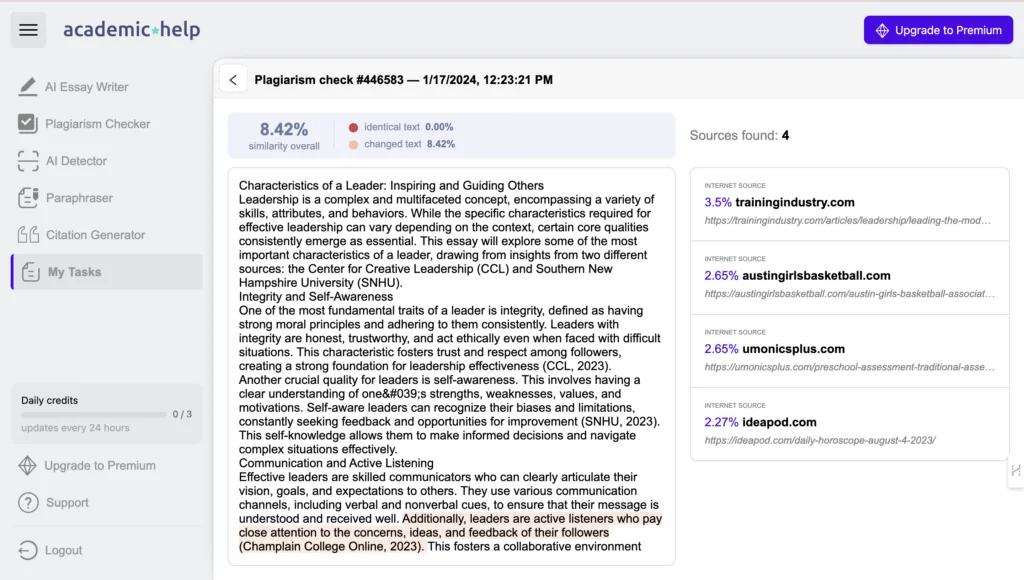

| | ChatGPT’s Essay | Bard’s Essay |
|---|---|---|
| Plagiarism Check | 25.74% | 8.42% |

In summary, both essays had their strengths and weaknesses in various areas, and improvements were recommended for both in terms of APA style, formatting, and citation quality.


The Verdict

Well, it was a very tough choice… Based on the A*Help score, Bard snatched the medal right out of ChatGPT’s hands! Both services are very handy for students, educators, and other creators, but Bard performed better in terms of essay quality. Its only major downside was that we had to prompt it several times to get close to the required word count. Nevertheless, whichever tool you choose, we advise you to always double-check everything and proofread your work before submitting it to a professor.

We’ve also checked a few other major AI chatbots. You can check out our reviews of Claude and Bing as well!

